The origin of ChatGPT

10 February 2023

Man invents, AI composes

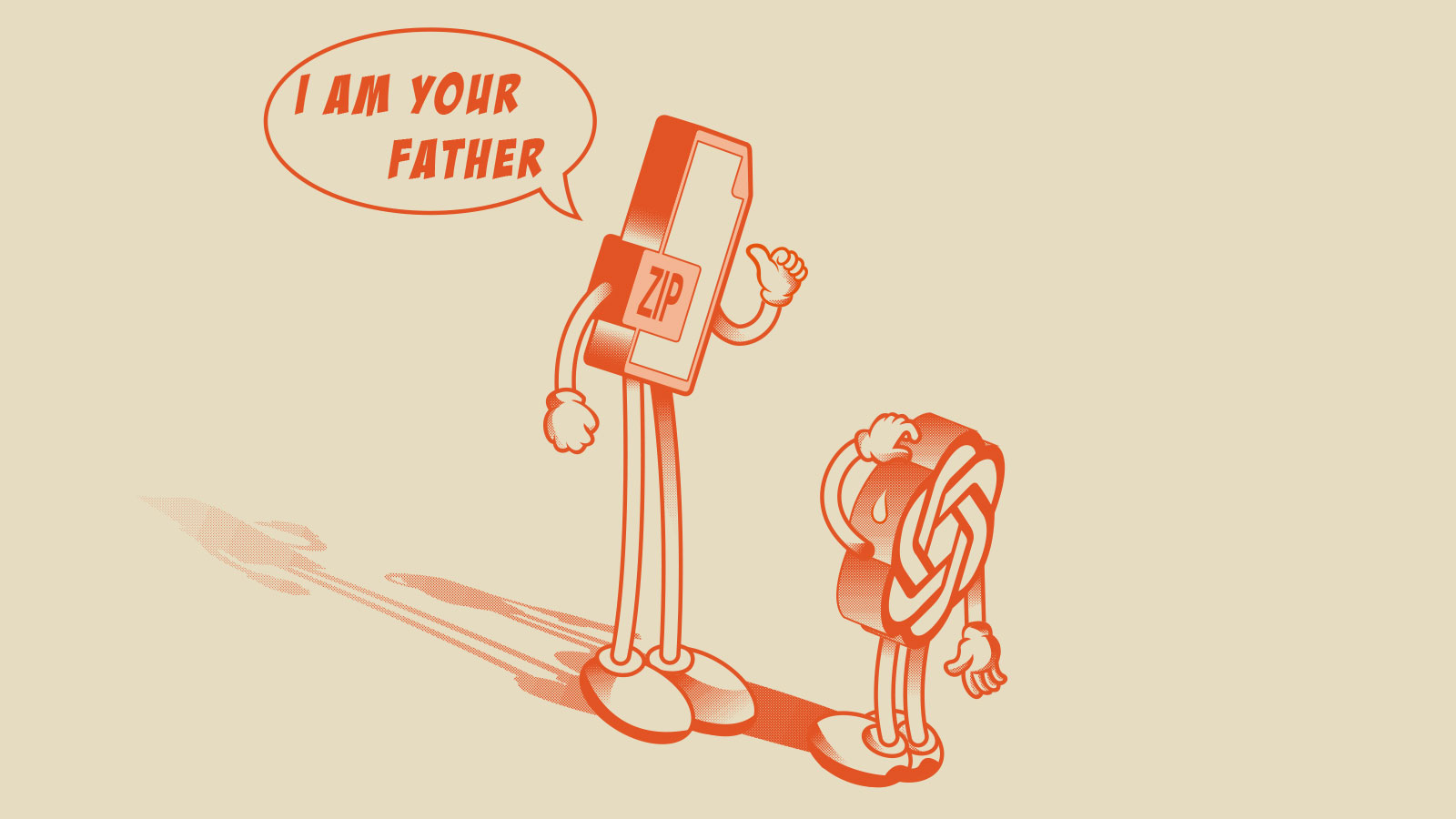

In 2013, workers at a German construction company noticed that copies of a floor plan showed room areas that differed from those in the original. The company contacted a computer scientist, who discovered that the Xerox copier was using “lossy” compression, meaning that some information is discarded during the compression process. Lossy compression is typically used for photos, audio and video, where absolute accuracy is not critical; for text files and computer programs, “lossless” compression is used because a single wrong character can cause errors. The copier judged the labels carrying the measurements to be similar enough that it kept only one of them and reused it for all the labels. This incident illustrates a potential danger of models like OpenAI’s ChatGPT: lossy compression can produce output that is subtly wrong yet looks entirely plausible.
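The difference between the two kinds of compression can be sketched in a few lines of Python. This is a toy illustration only, not the algorithm the copier used: `lossy_roundtrip`, its `max_diff` threshold and the sample labels are all hypothetical, chosen to show how treating "similar" labels as identical silently replaces one value with another, while a lossless round trip through the standard `zlib` module returns the input unchanged.

```python
import zlib


def lossless_roundtrip(text: str) -> str:
    """Compress and decompress with zlib; the output always equals the input."""
    return zlib.decompress(zlib.compress(text.encode("utf-8"))).decode("utf-8")


def count_differences(a: str, b: str) -> int:
    """Number of positions at which two equal-length strings differ."""
    return sum(x != y for x, y in zip(a, b))


def lossy_roundtrip(labels: list[str], max_diff: int = 1) -> list[str]:
    """Toy lossy scheme (hypothetical): keep one representative per group of
    'similar' labels and reuse it in place of every label in that group."""
    stored: list[str] = []
    output: list[str] = []
    for label in labels:
        match = next(
            (s for s in stored
             if len(s) == len(label) and count_differences(s, label) <= max_diff),
            None,
        )
        if match is None:
            stored.append(label)  # first label of its kind: keep it as-is
            match = label
        output.append(match)      # later look-alikes are replaced silently
    return output


# Illustrative room-area labels (made up for this sketch).
labels = ["14.13", "14.18", "17.42"]
print(lossless_roundtrip(" ".join(labels)))  # 14.13 14.18 17.42
print(lossy_roundtrip(labels))               # ['14.13', '14.13', '17.42']
```

The second label comes back as a perfectly legible number, which is why this kind of error is hard to notice: nothing in the output looks damaged.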

ChatGPT can be seen as a “lossy” compression of all the text on the Web. It retains much of the information but not the exact sequence of words, so asking it for an exact quote will rarely return a verbatim match. What it returns is grammatical text, yet the model is prone to “hallucinations,” or nonsensical answers to factual questions. These hallucinations resemble compression artifacts: they are plausible enough that spotting them requires comparison with the original information. Lossy compression algorithms use interpolation to estimate missing information, and ChatGPT does something similar when it generates text, in effect taking two points in “lexical space” and producing the text that would lie between them. People find this entertaining because ChatGPT is so good at this kind of interpolation that it works like a “blur” tool for paragraphs.
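Interpolation in the compression sense can be shown with a small numeric sketch. It is an analogy only and says nothing about how ChatGPT generates text internally; the function names and sample values are hypothetical. Half of the values are discarded, and the gaps are then filled by linear interpolation between the surviving neighbours.

```python
def downsample(values: list[float], step: int) -> list[float]:
    """Keep every `step`-th value and discard the rest (the lossy part)."""
    return values[::step]


def upsample_linear(samples: list[float], step: int, length: int) -> list[float]:
    """Rebuild `length` values by interpolating linearly between kept samples."""
    restored = []
    for i in range(length):
        left = i // step
        right = min(left + 1, len(samples) - 1)
        t = (i % step) / step  # fractional position between the two neighbours
        restored.append(samples[left] * (1 - t) + samples[right] * t)
    return restored


original = [0.0, 1.0, 4.0, 9.0, 16.0]
kept = downsample(original, 2)
restored = upsample_linear(kept, 2, len(original))

print(kept)      # [0.0, 4.0, 16.0]
print(restored)  # [0.0, 2.0, 4.0, 10.0, 16.0]
```

The restored values 2.0 and 10.0 sit smoothly between their neighbours but do not match the original 1.0 and 9.0. The only way to notice is to compare against the original, which is the same property the paragraph above attributes to hallucinations.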

Large language models like ChatGPT can be thought of as lossy text compression algorithms, although this perspective may seem to understate their apparent ability to understand text. They use statistical analysis to identify patterns in text, but the patterns they find are imperfect. They can solve simple arithmetic problems but fail at complex ones, and while their answers on other topics sometimes seem to show true comprehension, they are really just rephrasing. The illusion of understanding comes from the model’s ability to rephrase information instead of regurgitating it word for word.

Large language models such as OpenAI’s ChatGPT can be evaluated through the analogy of a blurry JPEG. The quality of the text these models generate is questionable, and fabrication and propaganda would need to be eliminated. Although these models can generate web content, that content may not serve people who are looking for information. The training data for the future GPT-4 model should be selected more strictly, excluding text generated by previous models. Using these models for original writing may not be appropriate either, because doing so skips the essential experience of writing non-original content. To create original work, it is best to start from scratch and hone your writing skills, which includes practicing on non-original content.

This article is a summary of Ted Chiang’s February 9 column in “The New Yorker.” The paradox? It was produced by ChatGPT. I invite you to read the original column and judge the quality of this “lossy” compression for yourself!